Highlights

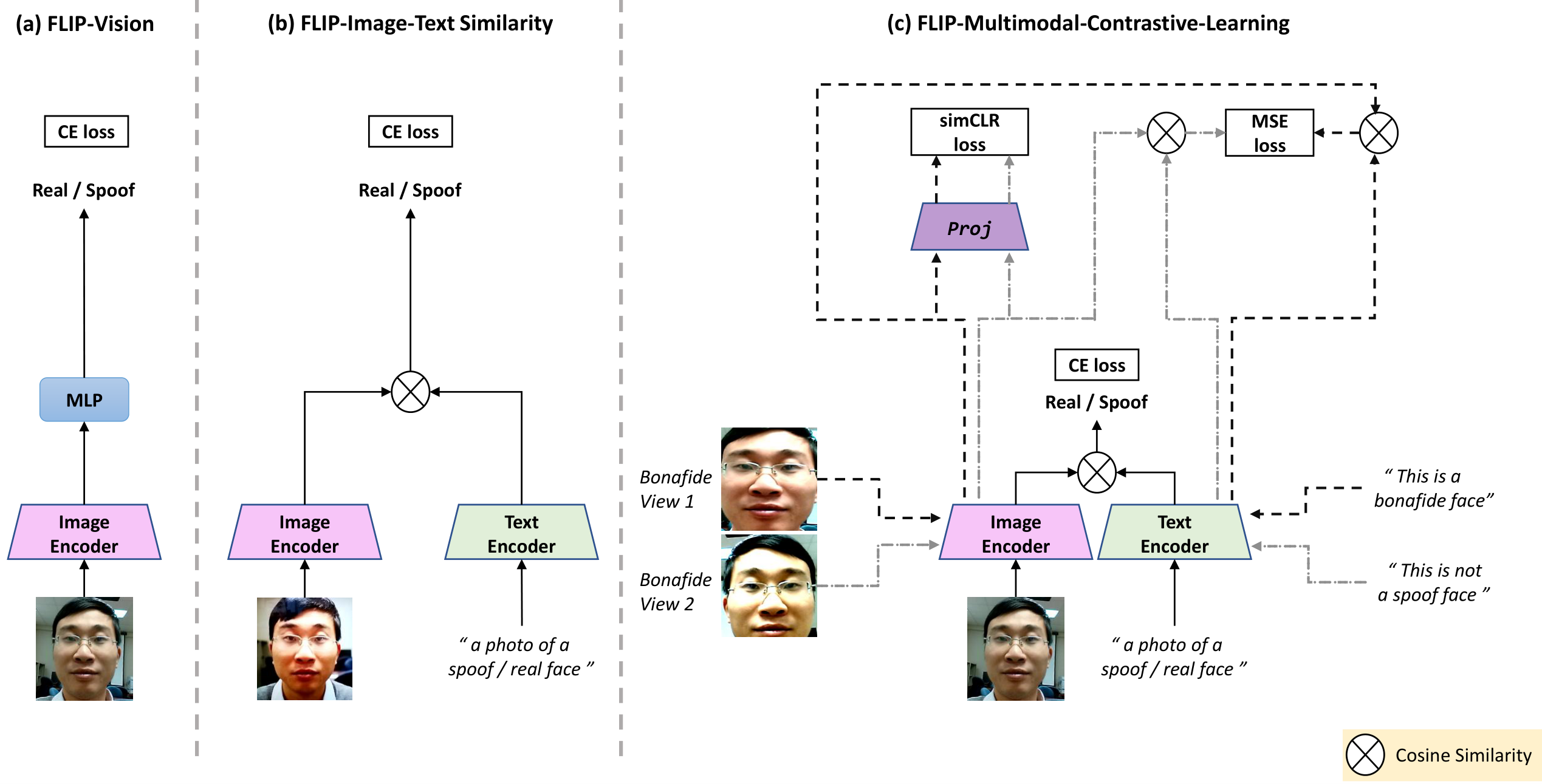

- We show that directly finetuning a multimodal pre-trained ViT (e.g., the CLIP image encoder) achieves better face anti-spoofing (FAS) generalizability without any bells and whistles.

- We propose a new approach for robust cross-domain FAS by grounding the visual representation using natural language semantics. This is realized by aligning the image representation with an ensemble of text prompts (describing the class) during finetuning.

- We propose a multimodal contrastive learning strategy, which encourages the model to learn more generalized features that bridge the FAS domain gap even with limited training data. This strategy uses view-based image self-supervision and view-based cross-modal image-text similarity as additional constraints during learning.

- Extensive experiments on three standard protocols demonstrate that our method significantly outperforms state-of-the-art methods, achieving better zero-shot transfer performance than five-shot transfer of “adaptive ViTs”.
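The language-guidance idea in the highlights above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: random vectors stand in for the CLIP image and text encoders, and the function names, the temperature of 0.07, the noise-based "views", and the mean-squared-error form of the view consistency term are all assumptions made for illustration. The sketch averages several prompt embeddings per class into one class embedding, scores images against the classes by cosine similarity, and adds a term that asks two views of the same image to have similar image-text similarities.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length along `axis`."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def ensemble_class_embeddings(prompt_embeddings):
    """Average normalized prompt embeddings per class.

    prompt_embeddings: (num_classes, num_prompts, dim)
    returns: (num_classes, dim), unit-length rows
    """
    per_prompt = l2_normalize(prompt_embeddings)
    return l2_normalize(per_prompt.mean(axis=1))


def image_text_similarity(image_embeddings, class_embeddings, temperature=1.0):
    """Cosine similarity between each image and each class, divided by temperature."""
    return l2_normalize(image_embeddings) @ class_embeddings.T / temperature


def cross_entropy(logits, labels):
    """Mean softmax cross-entropy, computed with a stable log-sum-exp."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())


def view_consistency(sim_a, sim_b):
    """Assumed form: mean squared difference of image-text similarities of two views."""
    return float(np.mean((sim_a - sim_b) ** 2))


# Stand-in data (hypothetical): in practice these would come from the encoders.
rng = np.random.default_rng(0)
dim, num_prompts = 16, 6
text_embeddings = rng.normal(size=(2, num_prompts, dim))  # class 0 = real, class 1 = spoof
class_embeddings = ensemble_class_embeddings(text_embeddings)

image_embeddings = rng.normal(size=(4, dim))
labels = np.array([0, 1, 1, 0])

# Two "views" of each image, simulated here by small additive noise.
view_a = image_embeddings + 0.05 * rng.normal(size=image_embeddings.shape)
view_b = image_embeddings + 0.05 * rng.normal(size=image_embeddings.shape)

logits = image_text_similarity(image_embeddings, class_embeddings, temperature=0.07)
alignment_loss = cross_entropy(logits, labels)
consistency_loss = view_consistency(
    image_text_similarity(view_a, class_embeddings),
    image_text_similarity(view_b, class_embeddings),
)
total_loss = alignment_loss + consistency_loss
```

Averaging over several prompts, rather than relying on a single sentence per class, makes the class embedding less sensitive to the wording of any one prompt. The view-based image self-supervision term mentioned in the highlights is omitted here to keep the sketch short.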

Results

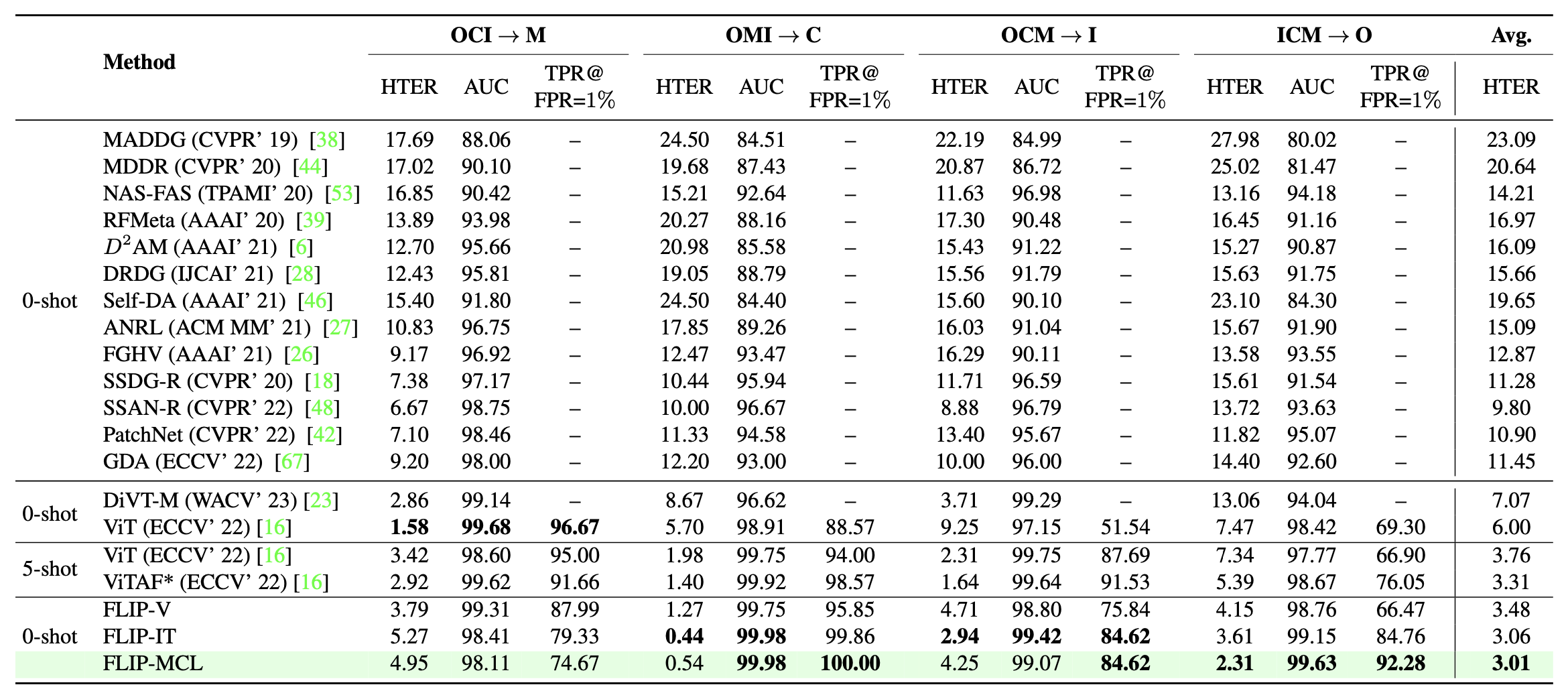

Cross Domain performance in Protocol 1

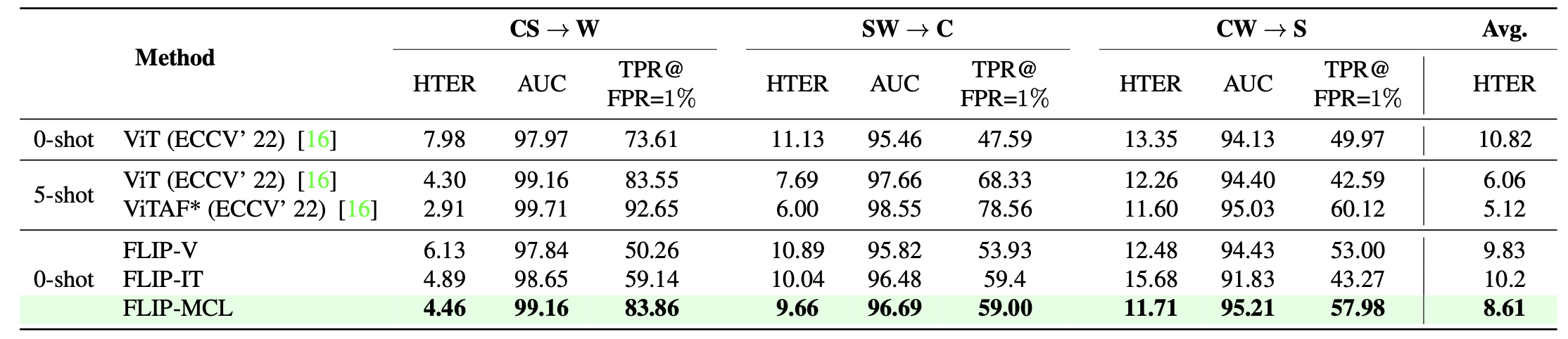

Cross Domain performance in Protocol 2

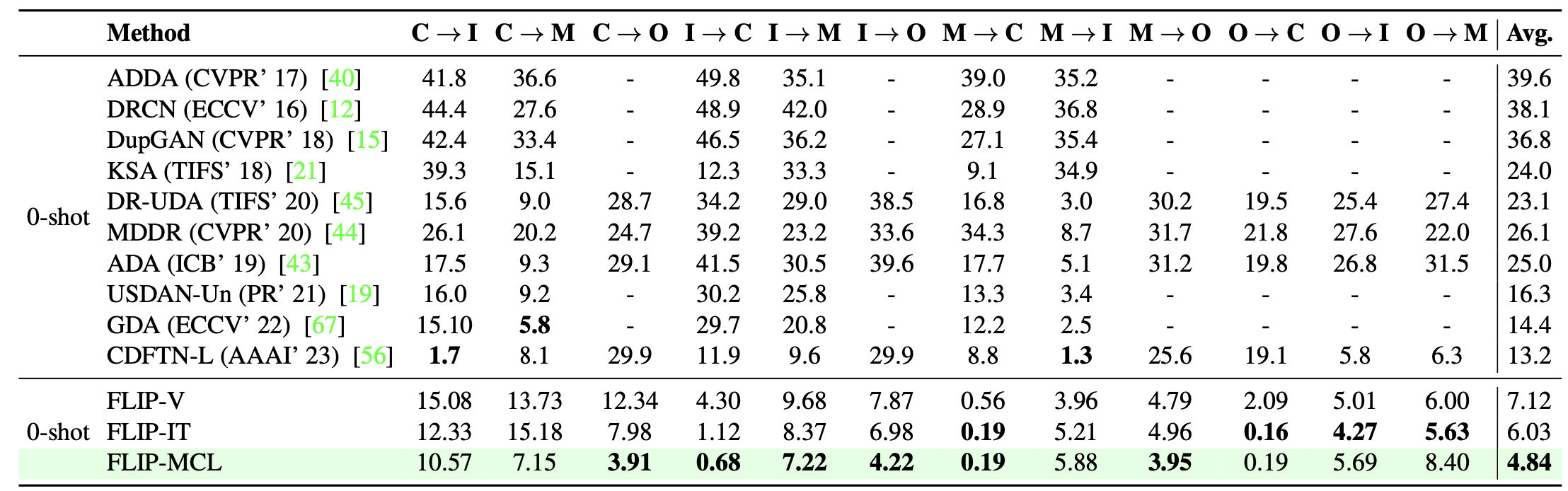

Cross Domain performance in Protocol 3

Visualizations

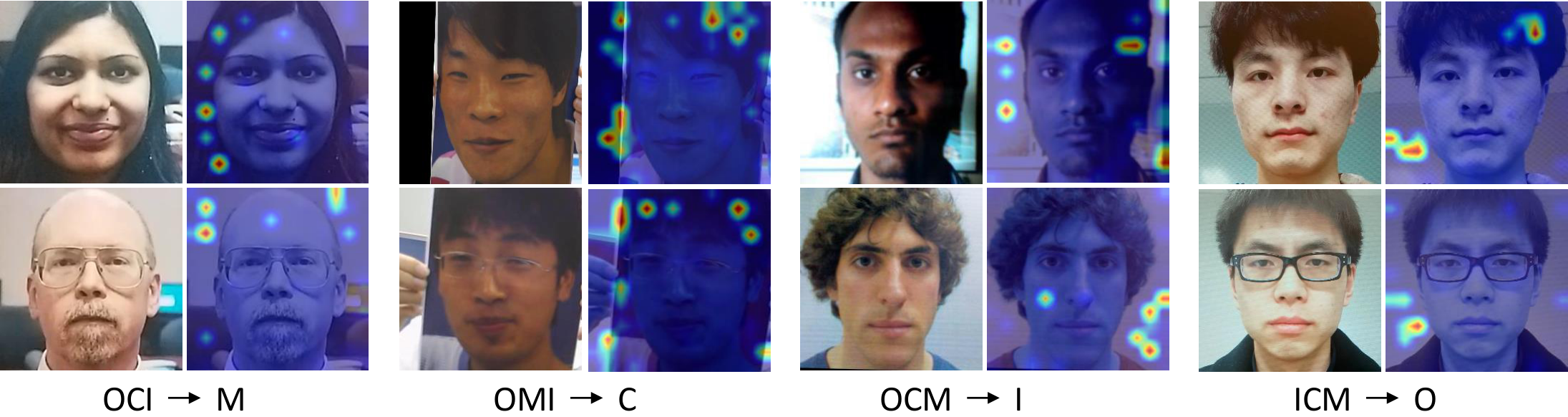

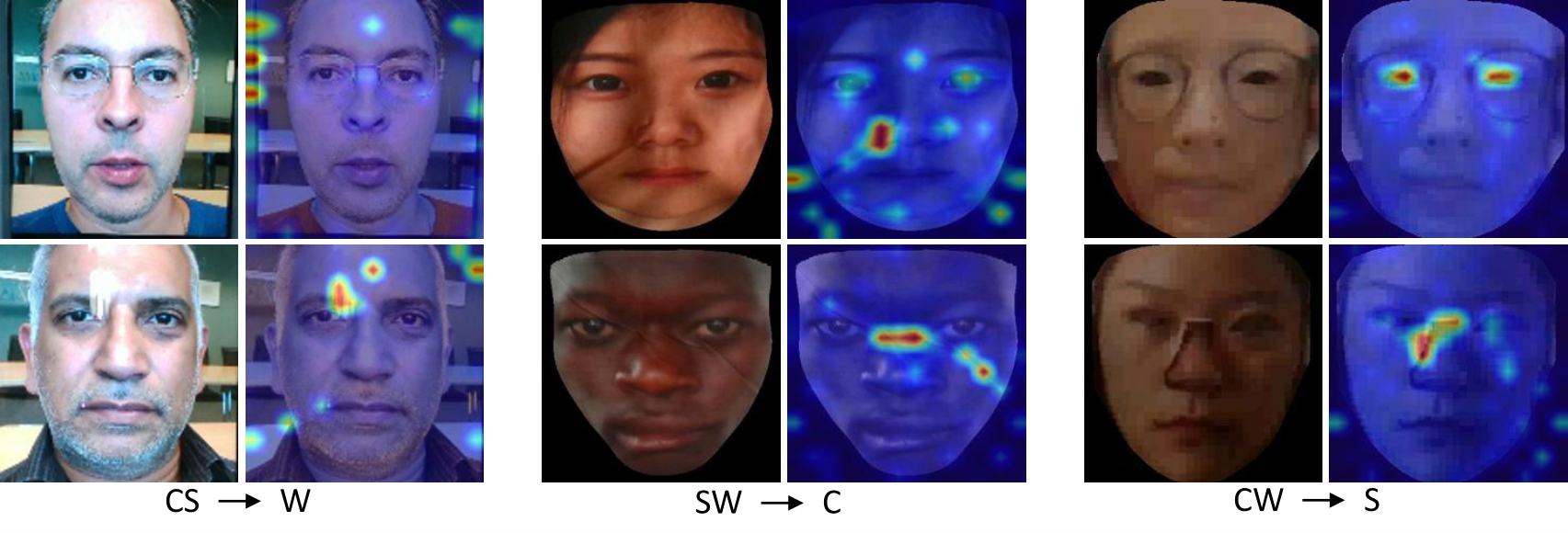

Attention Maps

Attention maps on spoof samples in the MCIO datasets: attention concentrates on spoof-specific clues such as paper texture (M), edges of the paper (C), and moiré patterns (I and O).

Attention maps on spoof samples in the WCS datasets: attention concentrates on spoof-specific clues such as screen edges and screen reflections (W), wrinkles in printed cloth (C), and cut-out eyes or nose (S).

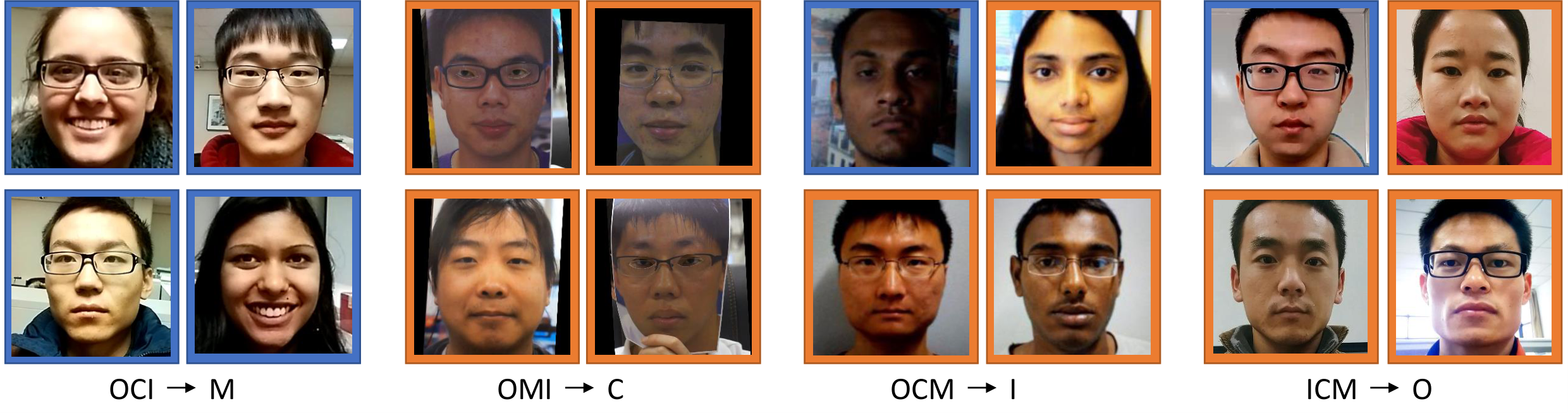

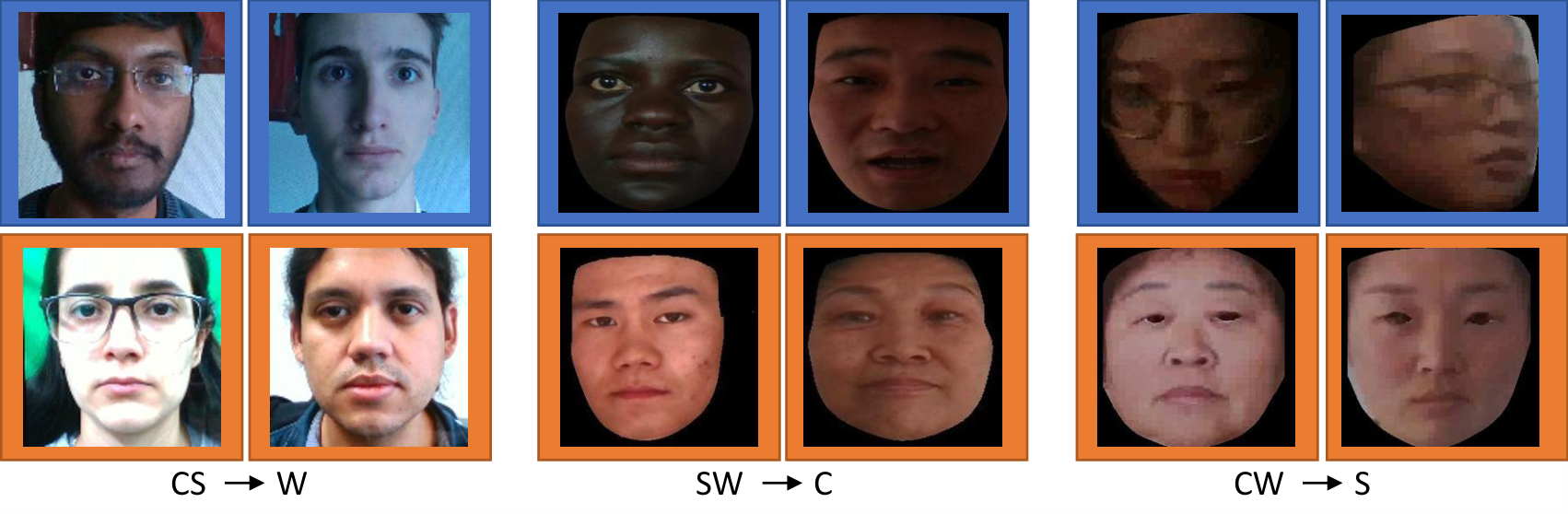

Misclassified Examples

Blue boxes indicate real faces misclassified as spoof. Orange boxes indicate spoof faces misclassified as real.

Misclassified examples in the MCIO datasets.

Misclassified examples in the WCS datasets.

BibTeX

    @InProceedings{Srivatsan_2023_ICCV,
        author    = {Srivatsan, Koushik and Naseer, Muzammal and Nandakumar, Karthik},
        title     = {FLIP: Cross-domain Face Anti-spoofing with Language Guidance},
        booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
        month     = {October},
        year      = {2023},
        pages     = {19685-19696}
    }